Semantic Search using OpenAI Embedding and Postgres Vector DB in NodeJS

This project implements semantic search in NodeJS using OpenAI embeddings and a Postgres vector database. Semantic search is a search technique that uses natural language processing to understand the meaning of a query and return results that are semantically related to it. The OpenAI Embeddings API converts text into a numerical vector representation that can be used for semantic search. Combined with a Postgres vector database, this lets us run a semantic search over any text or article we want.

For the example text, I chose this article: https://jamesclear.com/why-facts-dont-change-minds

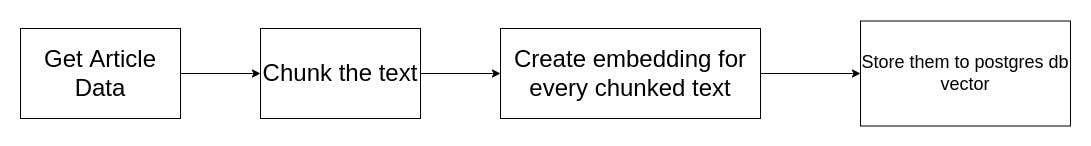

Diagram

Here is the diagram for the API that we want to create. It is split into two functions.

- Create and store the embedding based on the article text

- Search the queried text

Prerequisite

Database

For the Postgres database, we will be using Supabase. Supabase has a free tier, and we can create and use it instantly.

Let's install the pgvector extension for Postgres. Open the Supabase SQL editor and run:

create extension vector;

Note: pgvector is registered in Postgres under the name vector. If this extension depends on another extension, install that one as well.

Library

For this project, we use these Node.js libraries:

"@supabase/supabase-js" // Supabase Node.js client

"dotenv" // to read the .env file

"express" // REST API framework

"gpt-3-encoder" // to count tokens when chunking the text

"openai" // OpenAI Node.js client (the code in this article uses the v3 API)

npm install @supabase/supabase-js dotenv express gpt-3-encoder openai@3

ENV

For the environment variable, we need these 3 for this project:

SUPABASE_PROJECT_URL=

SUPABASE_SECRET_KEY=

OPENAI_API_KEY=

To get SUPABASE_PROJECT_URL and SUPABASE_SECRET_KEY (the service_role secret), first create a database in Supabase, then go to API Settings.

You can get the OPENAI_API_KEY from the API keys page of your OpenAI account.
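As a rough sketch of how these three variables could be checked at startup, the helper below fails fast when one is missing. The loadConfig name is hypothetical and not part of the original project; it assumes require('dotenv').config() has already copied the .env values into process.env.

```javascript
// Hypothetical helper, not taken from the original repository.
const REQUIRED_VARS = ['SUPABASE_PROJECT_URL', 'SUPABASE_SECRET_KEY', 'OPENAI_API_KEY'];

// Returns only the required variables from `env`,
// or throws an error that lists every missing one.
function loadConfig(env = process.env) {
  const missing = REQUIRED_VARS.filter((name) => !env[name]);
  if (missing.length > 0) {
    throw new Error(`Missing environment variables: ${missing.join(', ')}`);
  }
  return Object.fromEntries(REQUIRED_VARS.map((name) => [name, env[name]]));
}
```

Calling loadConfig() once at the top of embed.js and server.js would surface a forgotten key before any request is sent to Supabase or OpenAI.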

Code

Our Project structure will look like this:

node_modules

.env

article.txt

embed.js

openAi.js

package.json

server.js

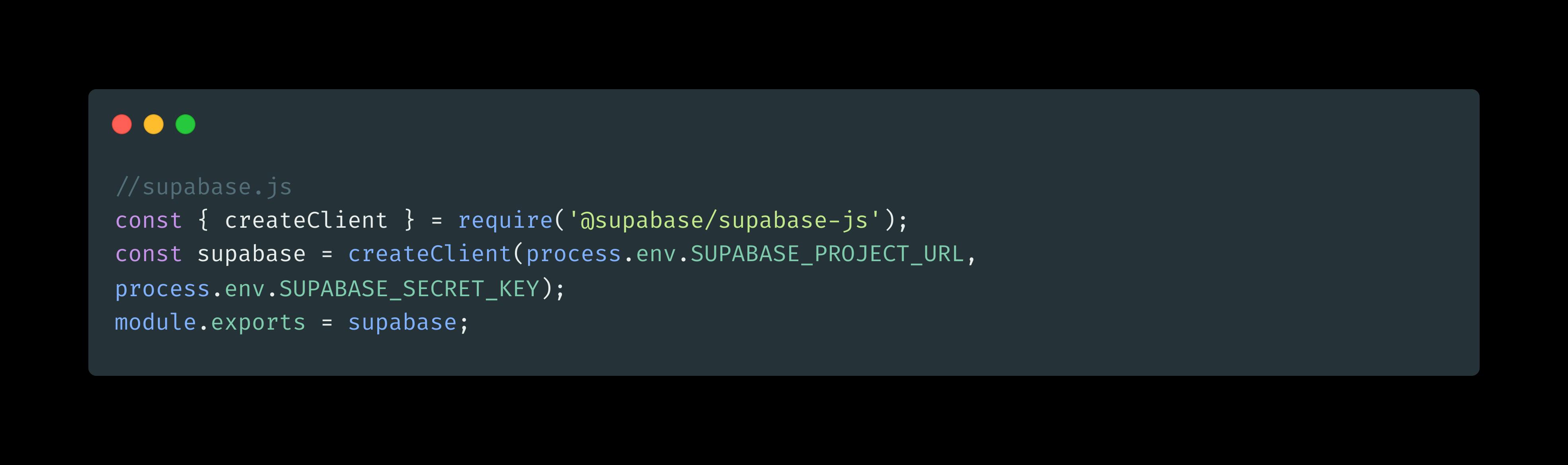

supabase.js

article.txt holds the content of the article that we want to search, so make sure to copy the article's text into it first.

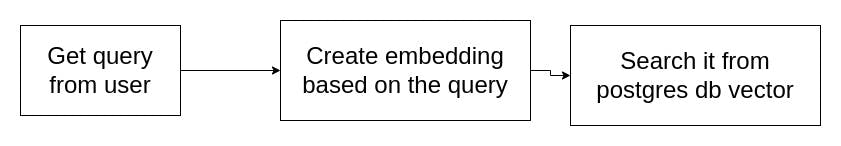

Let's create and store the article chunk's embedding first:

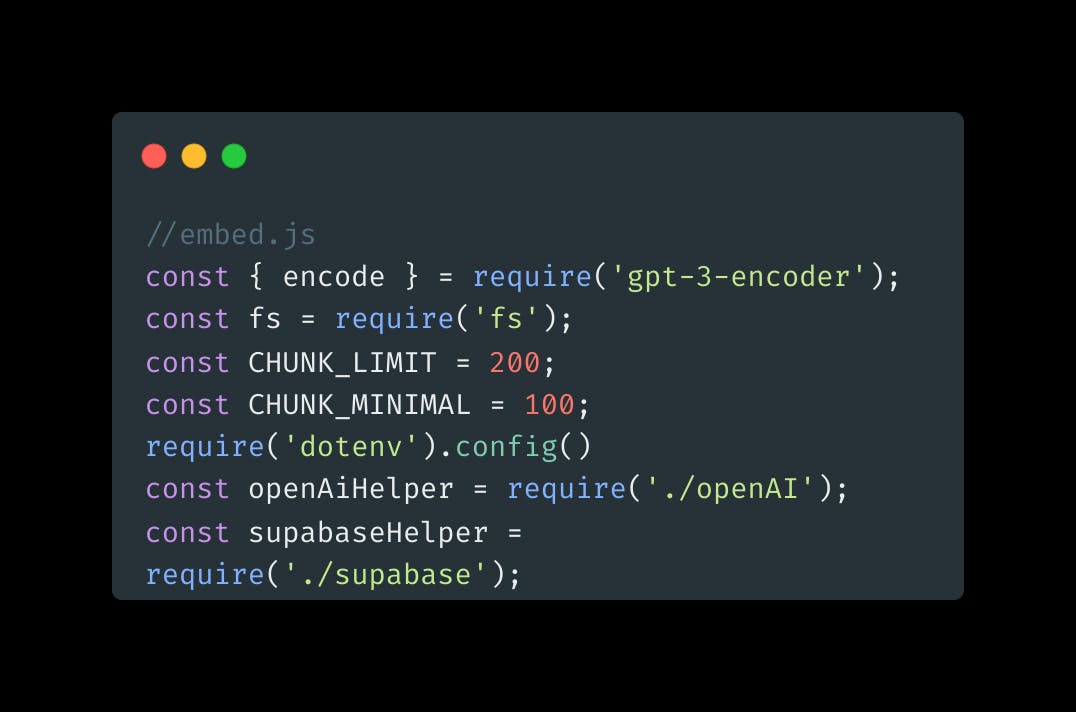

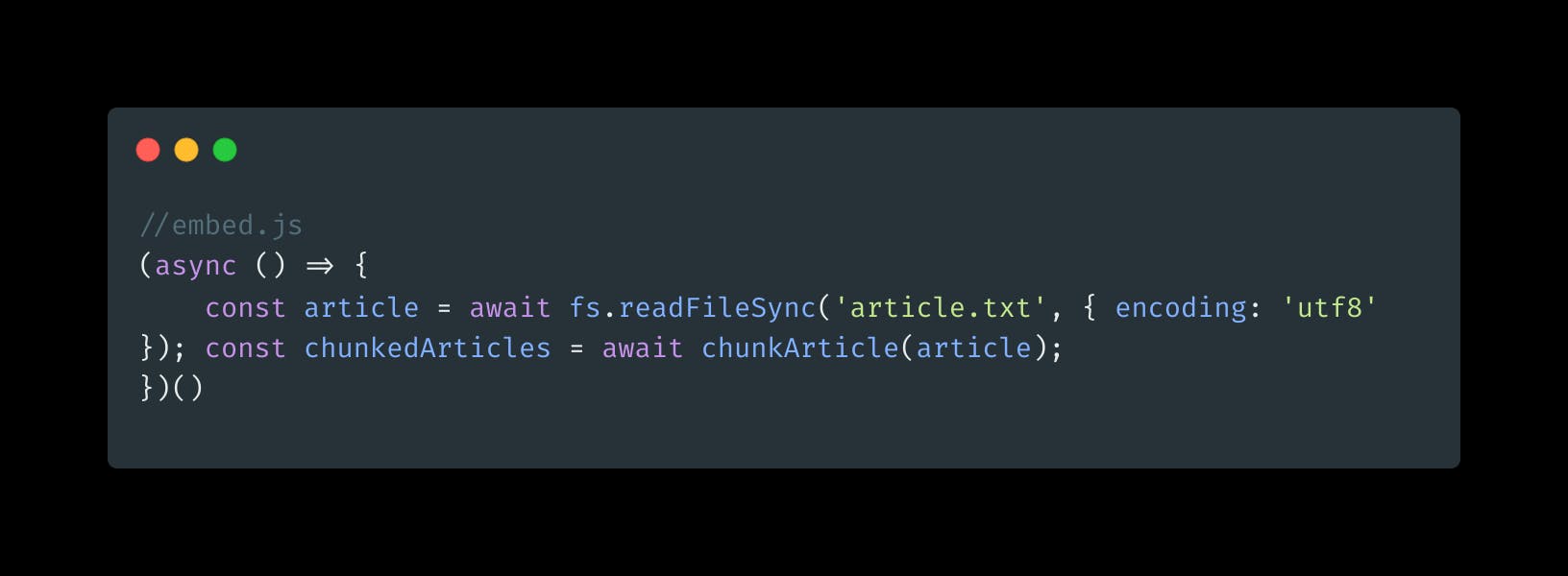

1. Get Article Data

We need to get the text from article.txt.

Before that, let's import the necessary libraries.

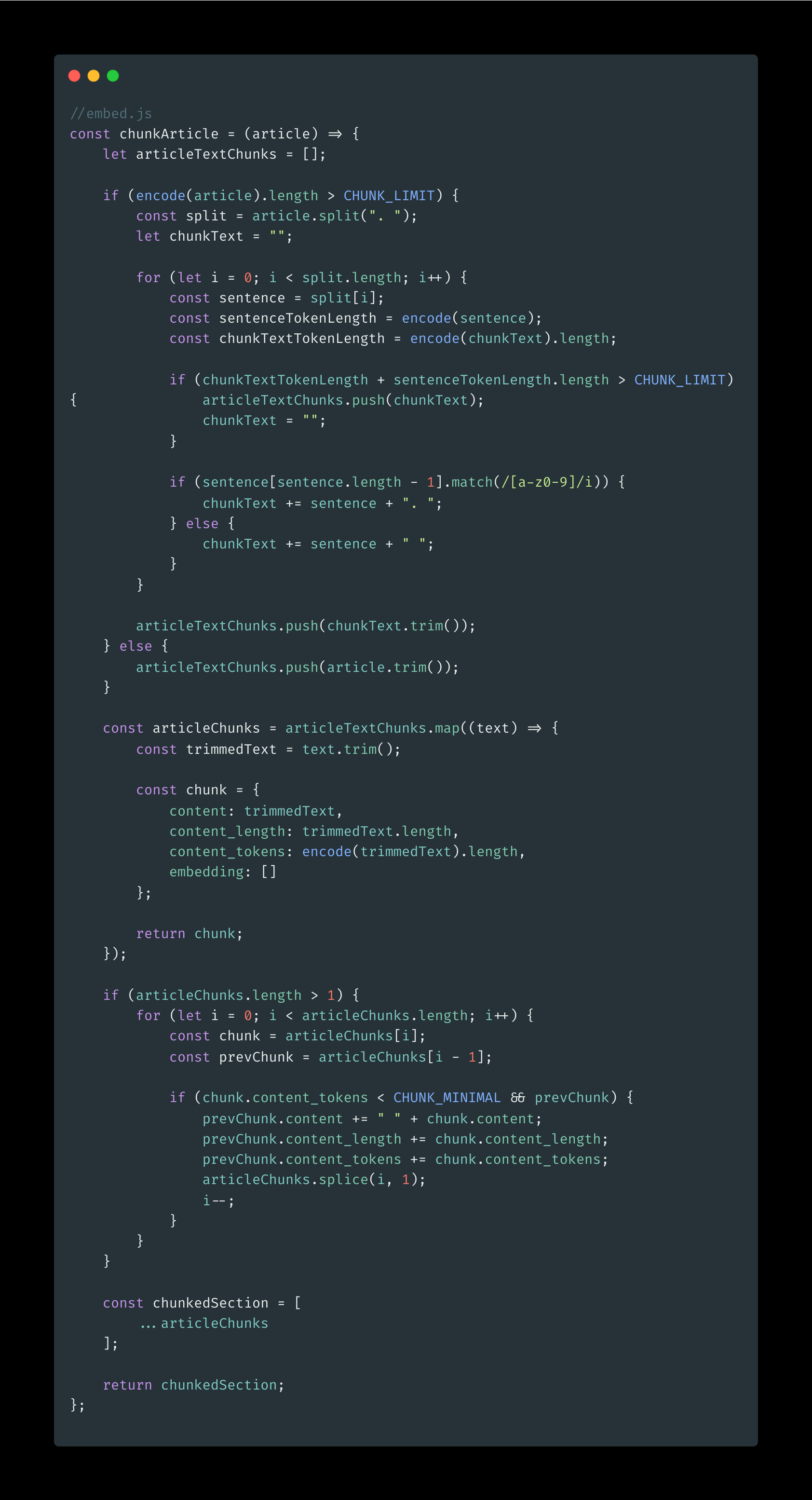
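A minimal sketch of this step, assuming article.txt sits in the same folder as the script. The readArticle name and the whitespace cleanup are illustrative choices, not taken from the original code.

```javascript
const fs = require('fs');
const path = require('path');

// Reads a text file and collapses runs of whitespace into single spaces,
// so that line breaks in the pasted article do not affect chunking later.
function readArticle(filePath = path.join(__dirname, 'article.txt')) {
  const raw = fs.readFileSync(filePath, 'utf8');
  return raw.replace(/\s+/g, ' ').trim();
}
```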

2. Chunk the text

What do we do here?

OpenAI counts text in tokens; one token is roughly three-quarters of an English word. We split the article into chunks of at most CHUNK_LIMIT (200 tokens) each, so that we can search over the chunks later.

The function first checks whether the token length of the encoded article is greater than CHUNK_LIMIT. If so, it splits the article into individual sentences and then concatenates them into chunks of at most CHUNK_LIMIT tokens. It also avoids splitting a sentence across chunks by checking whether the last character of a sentence is alphanumeric.

Each chunk is represented as an object with four properties: content (the text content of the chunk), content_length (the length of the text content), content_tokens (the number of tokens in the content), and embedding. The embedding is filled in later.

If a resulting chunk is smaller than CHUNK_MINIMAL (100 tokens), it is merged with the previous chunk. Finally, the function returns an array of the resulting chunks.

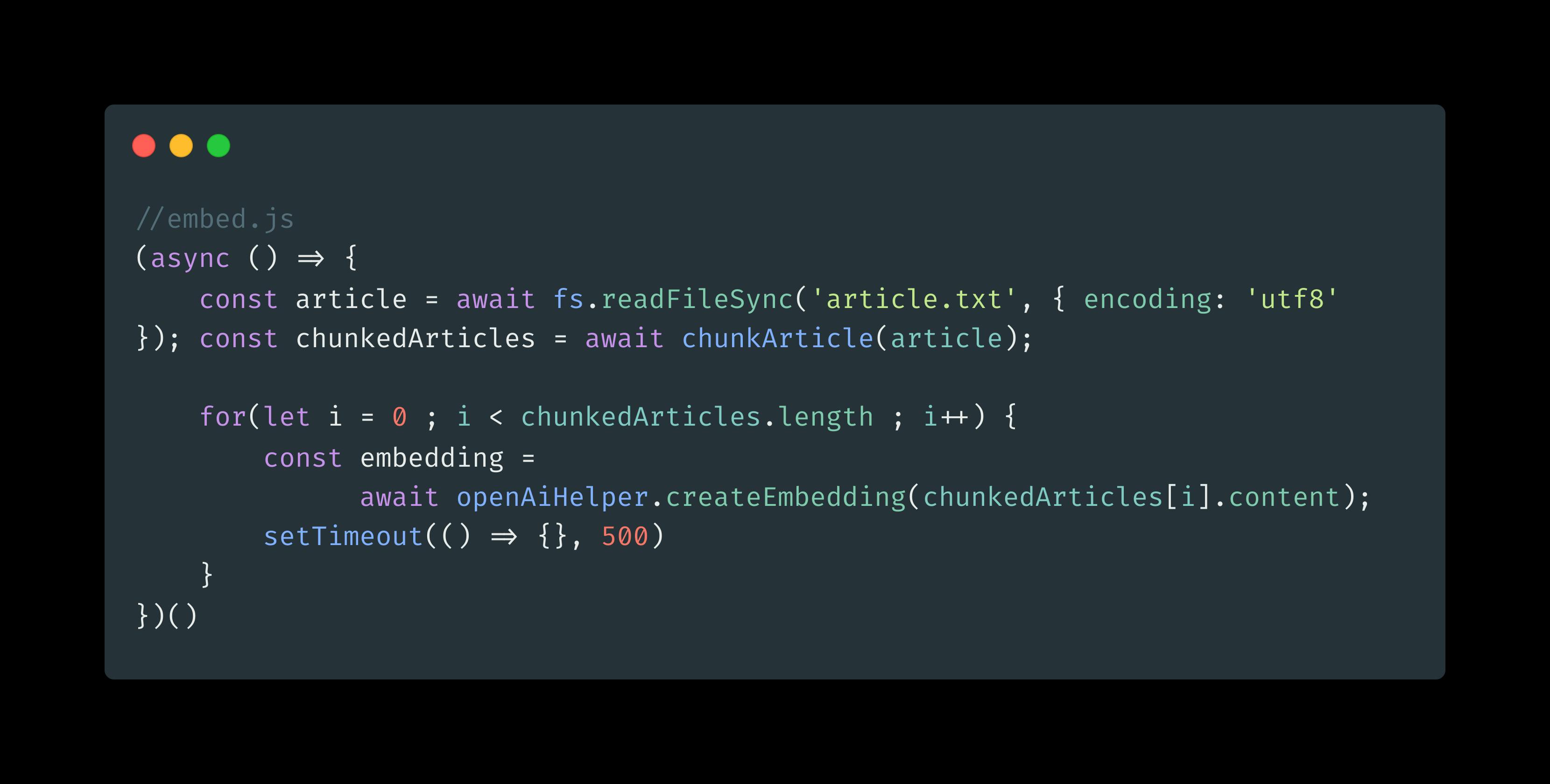
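The chunking logic described above can be sketched roughly as follows. This is an approximation under stated assumptions, not the original function: it splits sentences on ending punctuation rather than checking for an alphanumeric last character, and the token counter is injectable. The default countWords is a stand-in that counts words; in the project you would pass (text) => encode(text).length using encode from gpt-3-encoder.

```javascript
const CHUNK_LIMIT = 200;   // maximum tokens per chunk (value from the article)
const CHUNK_MINIMAL = 100; // chunks below this are merged into the previous one

// Stand-in token counter (assumption): counts whitespace-separated words.
const countWords = (text) => text.split(/\s+/).filter(Boolean).length;

// Builds the chunk object with the four properties described above.
const toChunk = (content, countTokens) => ({
  content,
  content_length: content.length,
  content_tokens: countTokens(content),
  embedding: [], // filled in by the embedding step
});

function chunkArticle(article, countTokens = countWords) {
  const text = article.trim();
  if (countTokens(text) <= CHUNK_LIMIT) return [toChunk(text, countTokens)];

  // Split after sentence-ending punctuation so a sentence is never cut in half.
  const sentences = text.split(/(?<=[.!?])\s+/);
  const pieces = [];
  let current = '';
  for (const sentence of sentences) {
    const candidate = current ? `${current} ${sentence}` : sentence;
    if (current && countTokens(candidate) > CHUNK_LIMIT) {
      pieces.push(current); // adding this sentence would exceed the limit
      current = sentence;
    } else {
      current = candidate;
    }
  }
  if (current) pieces.push(current);

  // Merge undersized pieces into the previous chunk.
  const chunks = [];
  for (const piece of pieces) {
    const last = chunks[chunks.length - 1];
    if (last && countTokens(piece) < CHUNK_MINIMAL) {
      chunks[chunks.length - 1] = toChunk(`${last.content} ${piece}`, countTokens);
    } else {
      chunks.push(toChunk(piece, countTokens));
    }
  }
  return chunks;
}
```

With this greedy approach, a single sentence longer than CHUNK_LIMIT still becomes its own oversized chunk, and a merged chunk can exceed the limit. Whether that matters depends on the article.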

3. Create Chunk's Embedding

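    // openAi.js
    // Written against the openai v3 SDK; v4 and later replace
    // Configuration/OpenAIApi with a different client.
    const { Configuration, OpenAIApi } = require('openai');

    const configuration = new Configuration({
      apiKey: process.env.OPENAI_API_KEY,
    });
    const openAi = new OpenAIApi(configuration);

    // Returns the embedding vector for the given text.
    const createEmbedding = async (input) => {
      const embeddingRes = await openAi.createEmbedding({
        model: 'text-embedding-ada-002',
        input,
      });
      // The API returns one entry per input; we sent one input, so take the first.
      const [{ embedding }] = embeddingRes.data.data;
      return embedding;
    };

    module.exports = {
      createEmbedding,
    };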

We use OpenAI's Create Embedding API to create the embedding of each text chunk.

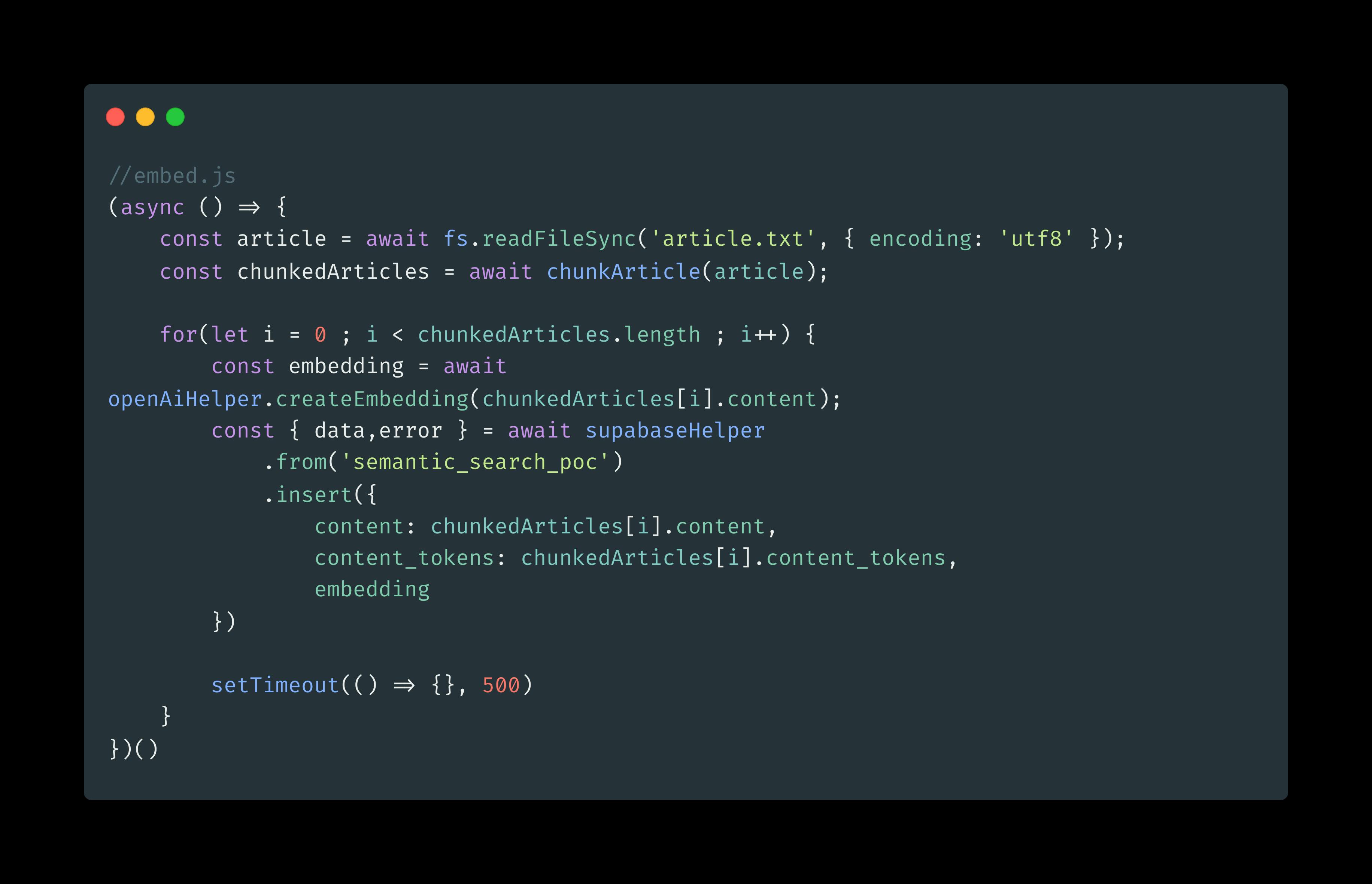
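One way to apply this to every chunk is sketched below. The embedChunks name is hypothetical, and the embedding function is passed in as a parameter; in the project it would be the createEmbedding exported by openAi.js. Requests are made one at a time, which is slow but simple.

```javascript
// Adds an embedding to every chunk, one request at a time.
// `createEmbedding` is injected: any async function that maps text to a vector.
async function embedChunks(chunks, createEmbedding) {
  const embedded = [];
  for (const chunk of chunks) {
    const embedding = await createEmbedding(chunk.content);
    embedded.push({ ...chunk, embedding }); // copy, leaving the input untouched
  }
  return embedded;
}
```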

4. Store them in Postgres DB

Before we store them, let's create the table first via the Supabase SQL editor:

    create table semantic_search_poc (
      id bigserial primary key,
      content text,
      content_tokens bigint,
      embedding vector(1536)
    );

You can name the table whatever you want. I named it semantic_search_poc, so our queries will refer to that name.
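A sketch of the insert, assuming supabase is a client created with createClient(SUPABASE_PROJECT_URL, SUPABASE_SECRET_KEY) from @supabase/supabase-js. The storeChunks name is hypothetical; it keeps only the columns that exist in the table above.

```javascript
// Inserts the embedded chunks into the table and returns how many rows were sent.
// `supabase` is assumed to be a supabase-js client.
async function storeChunks(supabase, chunks, table = 'semantic_search_poc') {
  // content_length is not a column in the table, so it is dropped here.
  const rows = chunks.map(({ content, content_tokens, embedding }) => ({
    content,
    content_tokens,
    embedding,
  }));
  const { error } = await supabase.from(table).insert(rows);
  if (error) throw new Error(`Insert failed: ${error.message}`);
  return rows.length;
}
```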

Now run the script

node embed.js

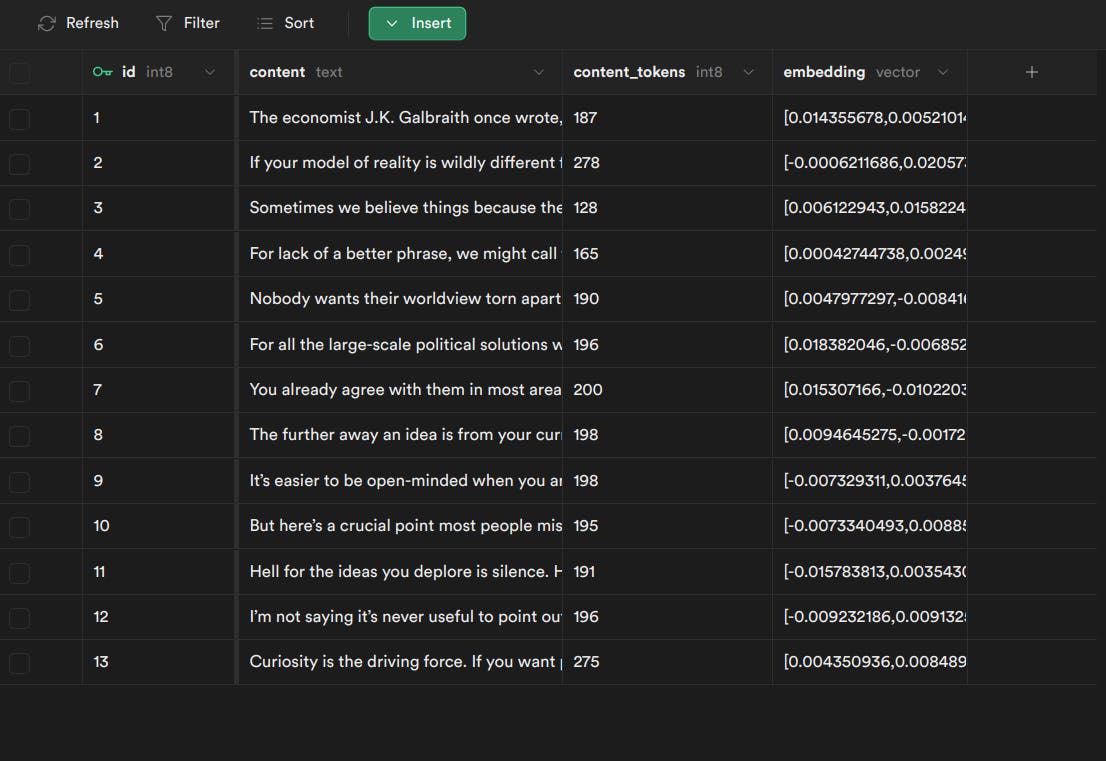

If the process goes smoothly, we will be able to see the data in the Supabase table view.

Done! You have successfully built the most crucial part of this project!

Now on to the next step: querying the data.

5. Get Query from User

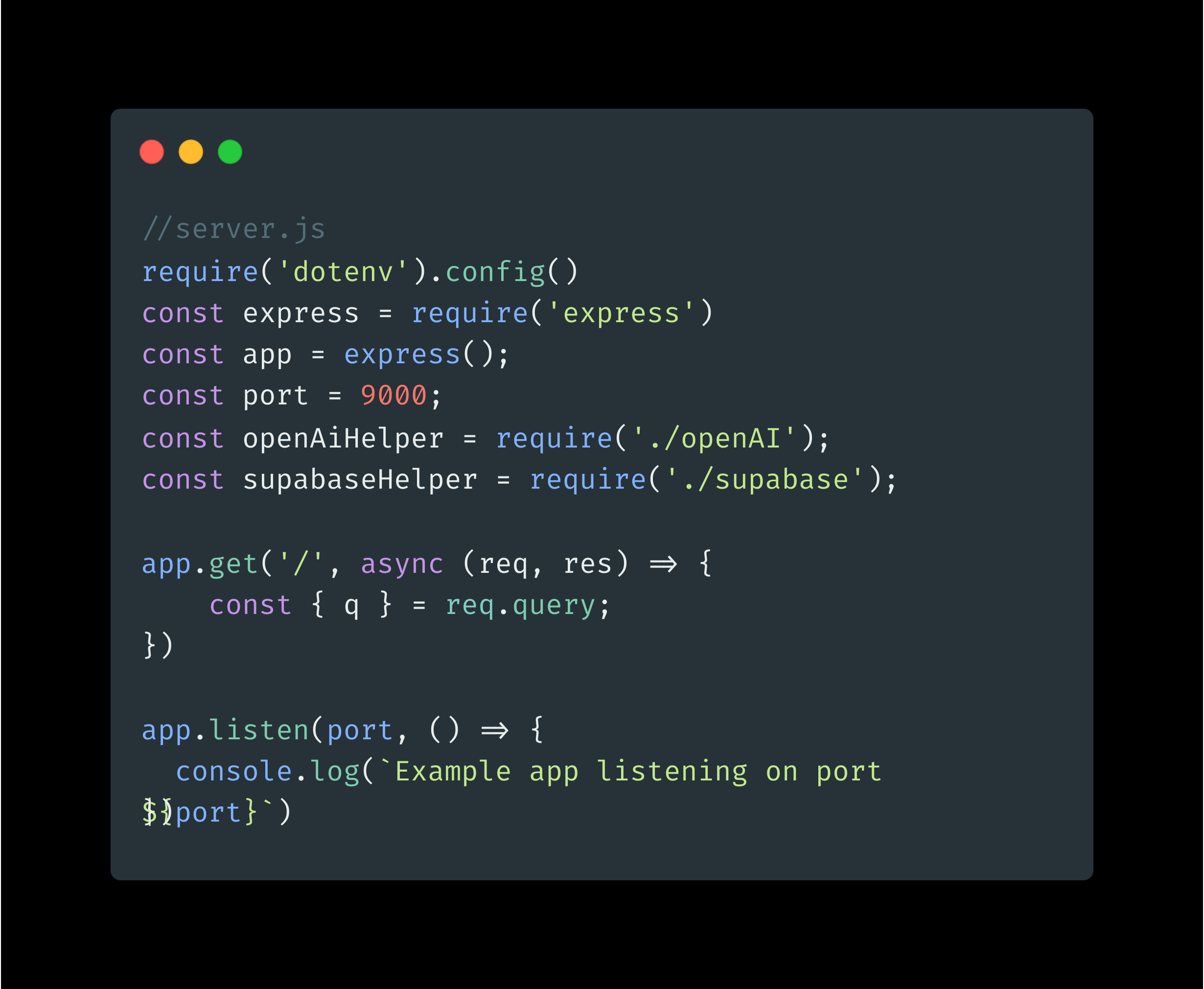

We use Express to create a REST API server that receives the query from the user. Create a simple Express server:

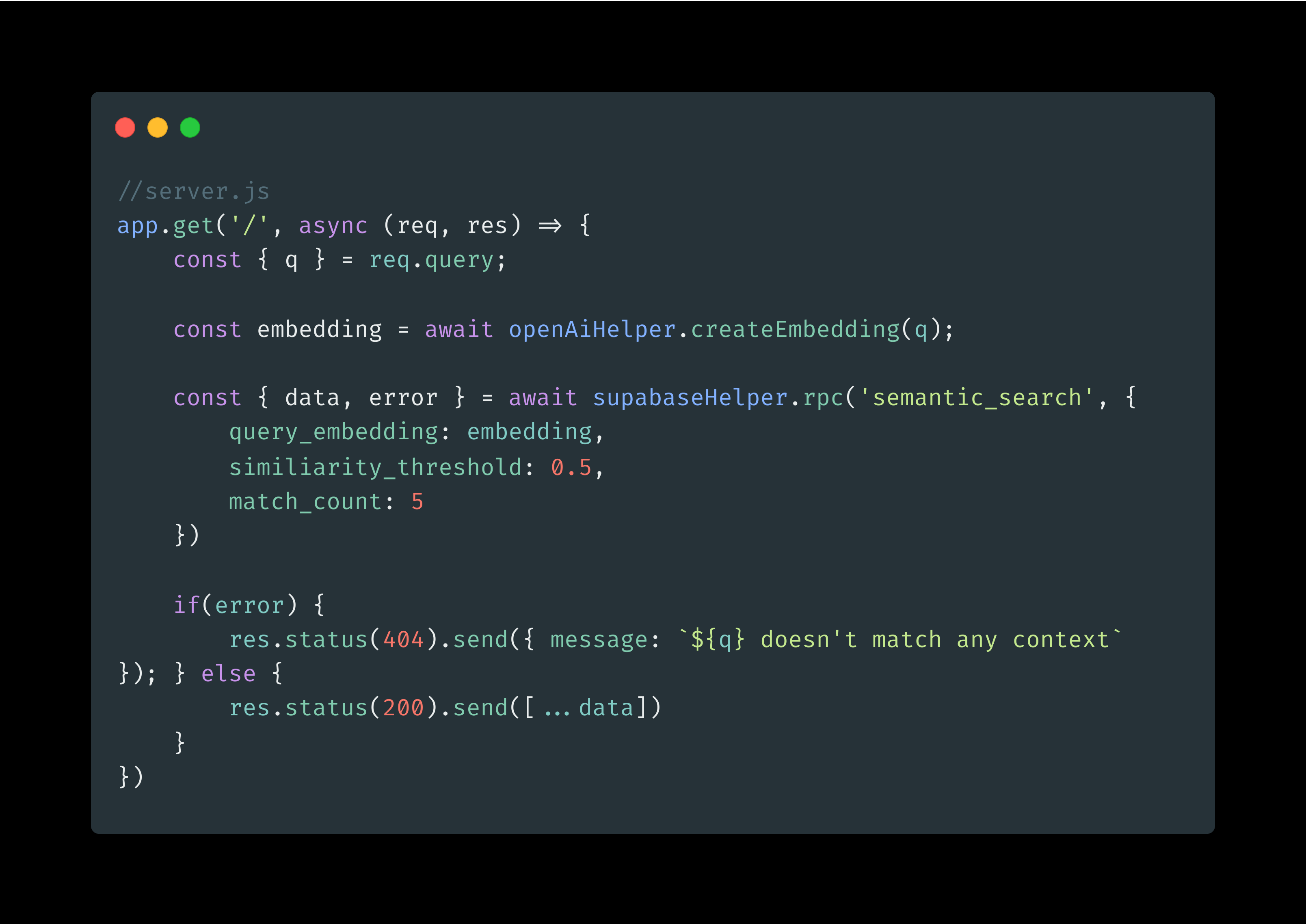

6. Create embedding based on query

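    // server.js
    app.get('/', async (req, res) => {
      const { q } = req.query;
      // Turn the user's query into an embedding, using the helper from openAi.js
      const embedding = await openAiHelper.createEmbedding(q);
      // The search itself is added in the next step
    });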

7. Search it in Postgres

We use a Postgres function for the search, so let's head over to the Supabase function editor.

    create or replace function semantic_search (
      query_embedding vector(1536),
      similiarity_threshold float,
      match_count int
    )
    returns table (
      id bigint,
      content text,
      content_tokens bigint,
      similiarity float
    )
    language plpgsql
    as $$
    begin
      return query
      select
        semantic_search_poc.id,
        semantic_search_poc.content,
        semantic_search_poc.content_tokens,
        1 - (semantic_search_poc.embedding <=> query_embedding) as similiarity
      from semantic_search_poc
      where 1 - (semantic_search_poc.embedding <=> query_embedding) > similiarity_threshold
      order by semantic_search_poc.embedding <=> query_embedding
      limit match_count;
    end;
    $$;

This script creates a Postgres function called "semantic_search". The function takes a query embedding, a similarity threshold, and a match count as inputs and returns a table with columns for ID, content, content tokens, and similarity.

The function scans the "semantic_search_poc" table for rows where the similarity between the query embedding and the content embedding is greater than the similarity threshold. The <=> operator is pgvector's cosine distance, so 1 minus that distance is the cosine similarity. Ordering by distance ascending puts the most similar rows first, and the output is limited to the specified match count.
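To make the similarity expression concrete, here is an in-memory sketch of the same filter, sort, and limit logic in plain JavaScript. It is only an illustration of what the SQL computes, not how the project runs the search; the function names are hypothetical.

```javascript
// Cosine similarity: dot(a, b) / (|a| * |b|).
// pgvector's `a <=> b` is the cosine distance, i.e. 1 minus this value.
function cosineSimilarity(a, b) {
  if (a.length !== b.length) throw new Error('Vectors must have the same length');
  let dot = 0;
  let normA = 0;
  let normB = 0;
  for (let i = 0; i < a.length; i++) {
    dot += a[i] * b[i];
    normA += a[i] * a[i];
    normB += b[i] * b[i];
  }
  return dot / (Math.sqrt(normA) * Math.sqrt(normB));
}

// In-memory equivalent of the SQL function: keep rows above the threshold,
// sort from most to least similar, and return at most matchCount rows.
function rankBySimilarity(rows, queryEmbedding, similarityThreshold, matchCount) {
  return rows
    .map(({ embedding, ...rest }) => ({
      ...rest,
      similarity: cosineSimilarity(embedding, queryEmbedding),
    }))
    .filter((row) => row.similarity > similarityThreshold)
    .sort((x, y) => y.similarity - x.similarity)
    .slice(0, matchCount);
}
```

Vectors pointing in the same direction score close to 1 and perpendicular vectors score 0, which is why a threshold between those values filters out unrelated chunks.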

The Postgres function is done. Now we call it from the code.
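A sketch of that call using the rpc method of the Supabase client. The semanticSearch wrapper and its default threshold and match count are assumptions chosen for illustration; the parameter names must match the SQL function exactly, including the similiarity_threshold spelling.

```javascript
// Calls the semantic_search Postgres function through the Supabase client.
// `supabase` is assumed to be a supabase-js client; the defaults are illustrative.
async function semanticSearch(supabase, queryEmbedding, { threshold = 0.5, matchCount = 5 } = {}) {
  const { data, error } = await supabase.rpc('semantic_search', {
    query_embedding: queryEmbedding,
    similiarity_threshold: threshold, // spelling matches the SQL parameter
    match_count: matchCount,
  });
  if (error) throw new Error(`Search failed: ${error.message}`);
  return data;
}
```

Inside the Express handler, the result of semanticSearch(supabase, embedding) could then be returned to the user with res.json().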

Run the server:

node server.js

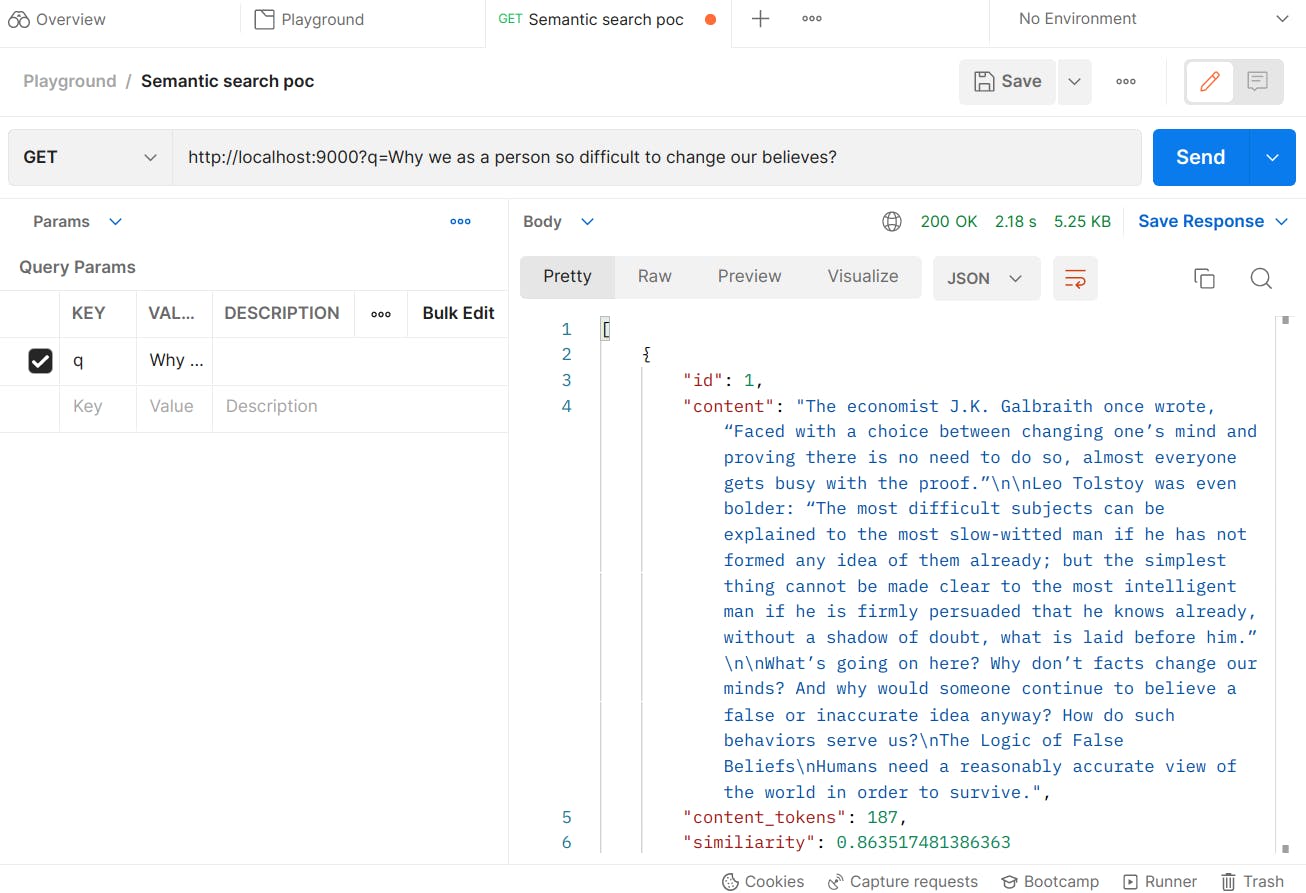

Now try sending a GET / request with the q parameter set to whatever you want to ask.
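Because the question usually contains spaces and punctuation, it has to be URL-encoded. A small sketch of building the request URL follows; the buildSearchUrl name and the http://localhost:3000 address in the usage comment are assumptions, so use whatever port your server listens on.

```javascript
// Builds the GET / URL with the question safely encoded in the q parameter.
function buildSearchUrl(baseUrl, question) {
  const url = new URL('/', baseUrl);
  url.searchParams.set('q', question);
  return url.toString();
}

// Example usage (Node 18+ ships a global fetch):
// const res = await fetch(buildSearchUrl('http://localhost:3000', 'Why do facts not change minds?'));
// console.log(await res.json());
```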

Voila! The API returns the related content ordered by the most similar one.

I hope this article helps you understand how easily we can build semantic search apps today.

Resources

GitHub for this project: https://github.com/fandyaditya/semantic-search-poc

References: https://github.com/mckaywrigley/paul-graham-gpt